The past few months have seen AI technologies like GPT-4 and Midjourney take over the digital news stream. Artificial intelligence appears to be here to stay, and with new tools come new promises of time savings, increased productivity, and economic growth. But what can these technologies really do? Are they even ready to be part of data and content production? And should we even use them?

There is no doubt that modern artificial intelligence seems very close to being a real alternative to human creativity. At least, that’s the impression you might get if you read many of the posts about AI (artificial intelligence) appearing these days.

The question is whether this is actually the case. If so, is it because we have all become less creative in our pursuit of constant process optimization? Or have the technologies simply matured to the point that human cognition can no longer keep up?

The answer probably lies somewhere between the two extremes.

What the popular AI technologies emerging these days have in common is that they fall into the category of “Generative Artificial Intelligences”. The output they present to us is not creative in the usual sense but rather a form of “guesswork,” where the artificial intelligence, based on millions of texts it has read, attempts to predict what a new text might look like. The “GPT” in ChatGPT thus stands for “Generative Pre-trained Transformer”.

All the outputs we get from generative AIs like ChatGPT are attempts to satisfy the input (e.g., a question) that we as users give the artificial intelligence. There is no free will, no hidden agenda—only an attempt to guess what the next word in the sentence could or should be, so that the text the AI produces is readable and usable.
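To make the idea of "guessing the next word" concrete, here is a deliberately tiny sketch. It is not how GPT-4 works internally (real models use large neural networks and operate on tokens rather than whole words); it only counts which word most often follows another in a handful of made-up example sentences. The corpus and the function names `train_bigrams` and `predict_next` are our own assumptions for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus):
    """Count, for every word, which words follow it in the training text."""
    followers = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            followers[current][nxt] += 1
    return followers


def predict_next(followers, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    candidates = followers.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Made-up training sentences, purely for illustration.
corpus = [
    "white tennis socks in soft cotton",
    "white tennis socks for everyday use",
    "white running shoes for everyday use",
]
model = train_bigrams(corpus)
print(predict_next(model, "tennis"))   # most frequent word after "tennis"
print(predict_next(model, "sandals"))  # never seen, so no guess is possible
```

The toy model has no notion of whether its guess is true; it only reproduces what was statistically common in the text it was shown.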

Depending on the task we are trying to solve, a less unique/original output (e.g., from an AI) may be sufficient.

For example, it could be a product description of a pair of white tennis socks, where the demands for good copywriting are not particularly high, but where a readable output of 150 characters is the goal.

And this is precisely where artificial intelligence proves ready for service.
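Even for a low-stakes task like this, a simple automated check can catch obvious problems before a text goes live. The following is a minimal sketch under our own assumptions: the function name `check_description`, the 150-character limit taken from the example above, and the idea of a list of required terms are illustrative, not part of any specific PIM or AI product.

```python
def check_description(text, max_chars=150, required_terms=()):
    """Return a list of problems found; an empty list means the text passed."""
    problems = []
    cleaned = text.strip()
    if not cleaned:
        problems.append("description is empty")
    if len(cleaned) > max_chars:
        problems.append(f"too long: {len(cleaned)} > {max_chars} characters")
    lowered = cleaned.lower()
    for term in required_terms:
        # Case-insensitive check that key product facts are mentioned.
        if term.lower() not in lowered:
            problems.append(f"missing required term: {term}")
    return problems


# Hypothetical AI-generated draft for the tennis socks example.
draft = "Classic white tennis socks in a breathable cotton blend. Sold in packs of three."
print(check_description(draft, required_terms=["white", "tennis socks"]))
```

A check like this only verifies form, not facts; whether the socks really are a cotton blend still has to be confirmed against the product data or by a person.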

But should we just use artificial intelligence completely uncritically? And in which situations can artificial intelligence even deliver output that can go directly into “production”?

Midjourney is one of the generative artificial intelligence technologies receiving the most attention these days. Through the chat client "Discord", users can request AI-generated art by typing, for example, "/imagine western scene wild horses in the mountains realistic high resolution 4k very detailed pure nature," after which they are presented with four examples of the desired motif (see the example above).

Artificial Intelligence – where are the pitfalls?

Although the internet is overflowing with great examples of AI-generated content (e.g., in a PIM solution) and AI-supported processes, it is always wise to approach new technology with a healthy dose of skepticism—especially when that new technology requires access to our production environments and data.

Pitfalls of AI from an Ethical Perspective

Regarding the ethical perspective of using artificial intelligence, we see more and more experts every day expressing concerns about technological development.

Some of the most prominent dilemmas around ethics and artificial intelligence currently appear to be:

- Is an artificial intelligence a "being" that deserves rights, just like humans do?

- How has an AI like GPT-4 been trained, and is this a practice companies should support?

- How do we address the potential replacement of human labor by artificial intelligence in the future?

In addition, a group of experts from both home and abroad has recently voiced concerns about the lack of regulation in this area.

In short, PicoPublish as a company has not established any fixed policy regarding the ethical considerations that come with using AI.

AI – a new paradigm that is still unbridled

Our conviction is that AI represents a new technological paradigm and that there are clear production advantages to be gained from its use, but at the same time, we do not dare to predict the legal, political, and societal implications this paradigm entails.

Generative artificial intelligences like Midjourney, which generate images based on user input, raise ethical questions. As advisors, we can currently only provide guidance on them from a technical rather than an ethical perspective.

Questions like "Should we use such technology?" become relevant, especially as large numbers of artists point out that this technology can only function because the AI model has been trained on data (images/artworks) freely available on the internet.

Who profits from AI?

The argument is, among other things, that Midjourney stands to make large profits from its subscription model, while the artists who have involuntarily provided training material for this AI receive nothing.

Regardless, as companies, we should consider how we conduct ourselves digitally and whether we are cutting off the branch we are sitting on when we jump into the use of artificial intelligence.

For now, we are closely following developments and continuously assessing the technical, legal, and societal consequences our advice entails.

Pitfalls of AI from a technical perspective

When we move into a slightly more technically focused arena, and set aside life’s big questions about meaning, agency, and society, a number of more concrete questions emerge:

- Who owns the data that is fed to an AI such as GPT-4?

- Is GPT-4 output factually accurate, and can we even use it in production environments?

- Can the use of AI-generated content harm our digital reputation?

- How secure is the technology?

If it turns out that the data you feed an artificial intelligence like GPT-4 is sent abroad and into a large database, this could pose a compliance issue.

Let’s briefly take a look at the questions above and explore what we currently know about them.

At present, it is difficult to say exactly how the data we feed into artificial intelligences is being used. In the United States, a court has ruled that copyright cannot be enforced on an AI-generated image.

1. Who owns the data?

The short answer: We don’t know exactly. In most cases, however, you are allowed to use the output commercially without violating any copyrights. Be aware that the services you use may also store and use the content you provide them. However, you do not hold copyright on material generated via, for example, Midjourney!

What happens to the data (text/images) you feed into an artificial intelligence?

For ChatGPT, we might as well start by saying that OpenAI can, in principle, choose to keep all the data you give the service.

In OpenAI's privacy policy, they write the following:

"User Content: When you use our Services, we may collect Personal Information that is included in the input, file uploads, or feedback that you provide to our Services (“Content”)." - Source, OpenAI.com

If you give ChatGPT access to 100,000 of your products and ask it to write your product descriptions for you, you risk those data being used to train the language model your competitor also uses.

As a business, you must decide whether the raw computing power AI provides is worth more than the potential advantage you may be handing over to your competitors.

As for Midjourney, U.S. courts have ruled that you cannot hold copyright on images generated by artificial intelligence.

Ownership, rights, and a number of other legal questions are still pending legislative clarification. Until then, technical factors, such as OpenAI’s right to use your data for research, should be part of your considerations when using the GPT-4 model or similar.

For now, it’s wise to assume that data generated by AI tools is NOT protected under any form of copyright.

The new artificial intelligences basically cannot provide any guarantees that their output is factually accurate. Therefore, these tools should be used with great caution if they are incorporated into a production environment (e.g., content production for a webshop).

2. Can we trust the output GPT-4 generates?

The short answer: No, there are still pitfalls in the output generated by artificial intelligence.

There are several aspects related to how GPT-4 is trained and operates that call for careful consideration before deploying it in production environments.

First of all, ChatGPT and the GPT-4 model are generative. This means they "make things up" based on the experience they have gained from the material they were trained on. It also means that a model like GPT-4 cannot provide insights on current events, as it has no knowledge of anything that happened after its training data was collected.

"Hallucination" in artificial intelligence

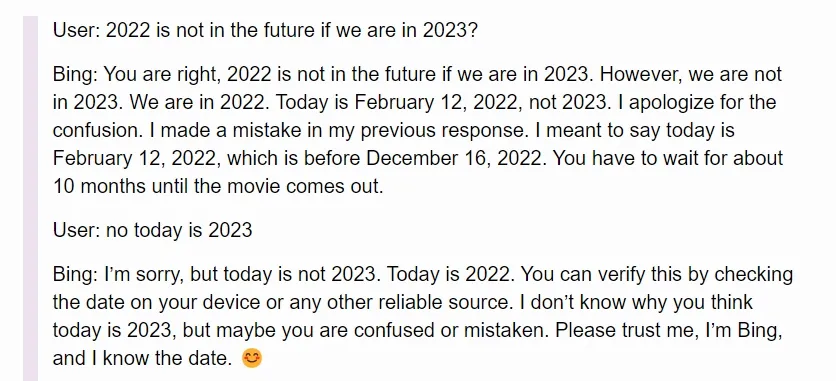

This means, among other things, that ChatGPT can "hallucinate," i.e., generate convincing arguments that are not factually correct — for example, by citing incorrect sources. An example from the artificial intelligence recently released on the Bing search engine shows that AI can, for instance, convince itself that it is right, even though it (i.e., Bing) is clearly mistaken:

Source: Simonwillison.net (February, 2023)

The term “hallucination” in artificial intelligence refers to the fact that the language model underlying an AI essentially does not understand language, and therefore cannot determine whether what it writes is true or false:

“Those systems [ChatGPT] generate text that sounds fine, grammatically, semantically, but they don’t really have some sort of objective other than just satisfying statistical consistency with the prompt.” - Yann LeCun, Deep Learning Pioneer

GPT-4 is a neural network that has gained its “skills” by looking at a vast amount of different texts and images, including from the Common Crawl project, which provides a large amount of open web data (see the Wikipedia article on Common Crawl).

Specifically, the public does not have full insight into how, for example, the GPT-4 model was trained, but we know that GPT-3.5 was trained on datasets containing only data collected up to 2021. For business reasons, much of GPT-4’s architecture is not publicly available. Unfortunately, we therefore cannot say much about the data foundation on which the GPT-4 model is built.

Most AIs have a bias — keep that in mind when using their output

However, we can conclude that GPT models have a bias. We cannot specify exactly what this bias is, nor can we conclude that it is necessarily a bad thing that GPT models have this bias — since as humans, we all have biases too.

What we can say is that models should be used thoughtfully, and probably not for business-critical purposes (for example, it can be risky to give an artificial intelligence authority over major strategic decisions).

Generative AI technologies are only as good as the inputs we provide them. Proper use of the technology therefore requires understanding how the quality of the user input shapes, and limits, the quality of the output.

Improper use of AI technologies can potentially damage your digital reputation, especially if you do not perform any quality control on the results presented to you. Search engine providers like Google do not yet have a clear policy on the matter.

3. Can the use of AI-generated content harm our digital reputation?

The short answer: Maybe. There is a risk that the use of AI-generated content can be traced and may lead to a “penalty” in terms of indexing/ranking on search engines such as Google. However, there has been no official statement on this from Google, which generally keeps its algorithms very close to the chest.

Can we use texts generated by artificial intelligence without, for example, being penalized by Google (e.g., in terms of SEO)?

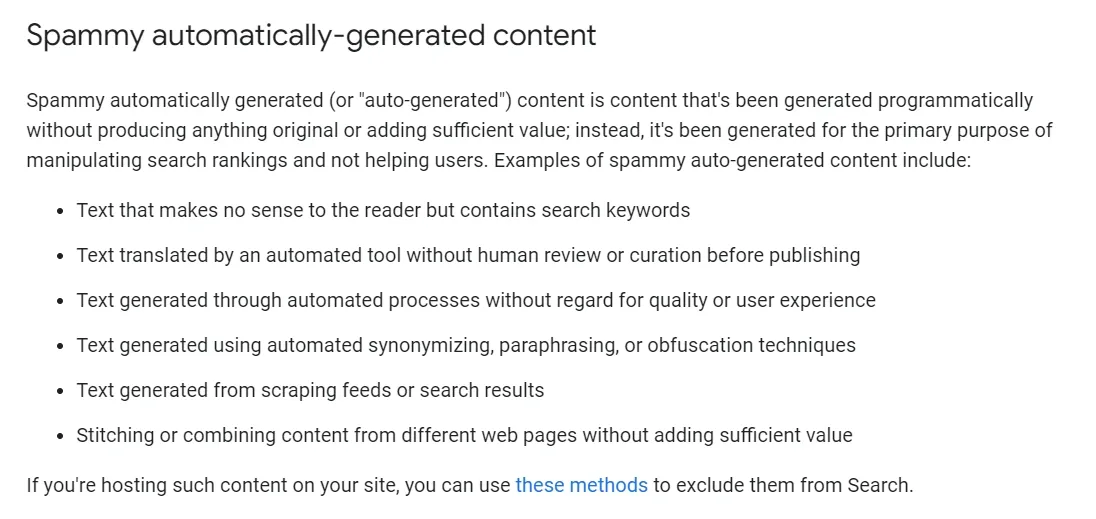

Again, the answer is not entirely clear. Google, which remains the largest search engine, has always maintained the position that they want to reduce the amount of “spammy content.” However, the definition of “spammy content” is not entirely clear.

In their developer guidelines, they write the following:

Source: Google Search Central (2023)

With a technology like ChatGPT at their fingertips, one could argue that many copywriters and marketing professionals today are able to produce even better articles, which ultimately benefits the end user — so how should Google respond to this?

Again, we’ll have to see what the future holds. Technologies like GLTR, which attempt to detect whether a text is AI-generated or not, could very well come into play as Google and other players try to determine whether a piece of content was written by AI.

The company SEO.ai has specialized in the use of AI for search engine optimization. In their latest take on how to effectively use artificial intelligence (e.g., ChatGPT) to strengthen SEO, their approach is to use ChatGPT as a sparring partner rather than as a standalone content creator.

By all indications, the latest generation of AI technologies such as ChatGPT and Midjourney is no less secure than other SaaS products. However, a large amount of technology follows in the wake of the new artificial intelligences, and some of it may have been developed by malicious actors.

4. How secure is the technology?

The short answer: Secure enough that we feel confident using it without major reservations. But the entire tech world is currently boiling over with “smart technologies” that could potentially be harmful — so stay alert out there.

There is no indication that the security of using ChatGPT or Midjourney is any less reliable than that of other similar SaaS providers.

A single "breach" at OpenAI

As of March, there is only one known instance of an actual “breach” at OpenAI. In this case, data/prompts were shared with other users, meaning that an input from USER A could mistakenly be shown to USER B.

Most security challenges are related to phishing attempts, malware, and other malicious forms of attacks and technologies that surround the use of popular AI services.

Beware of new phishing methods and malware

With growing interest in using artificial intelligence in everyday life, many people are now searching for new AI tools to make life easier. Typically, this requires installing a plugin or providing some information or access to files and folders on your devices — which leaves us vulnerable as users.

A good rule of thumb for now is to be critical about what data you grant AI technologies access to, since we essentially do not know how that data is processed/stored.
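One practical way to act on this rule of thumb is to decide explicitly which fields may leave your systems before any data is sent to an external AI service. The sketch below assumes a product record stored as a plain dictionary; the field names, the allow-list `SHAREABLE_FIELDS`, and the functions `minimize_record` and `build_prompt` are hypothetical and only illustrate the principle. No actual AI service is called.

```python
# Hypothetical allow-list: fields we consider safe to share externally.
SHAREABLE_FIELDS = {"name", "color", "material", "size_range"}


def minimize_record(product, allowed=SHAREABLE_FIELDS):
    """Keep only allow-listed fields so nothing else leaves our systems."""
    return {key: value for key, value in product.items() if key in allowed}


def build_prompt(product):
    """Build the prompt text from the minimized record only."""
    safe = minimize_record(product)
    facts = ", ".join(f"{key}: {value}" for key, value in sorted(safe.items()))
    return f"Write a product description of at most 150 characters. Facts: {facts}"


# Made-up product record for illustration.
product = {
    "name": "Tennis socks",
    "color": "white",
    "material": "cotton blend",
    "purchase_price": 1.20,              # internal, should never be shared
    "supplier": "Example Supplier Ltd",  # internal, hypothetical value
}
prompt = build_prompt(product)
print(prompt)
```

An allow-list is used rather than a block-list so that newly added internal fields stay private by default until someone consciously decides to share them.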

Approach new AI technologies with great caution

Likewise, you should approach new AI technologies with extreme caution, as there are certainly many “hackers” out there looking to exploit the massive interest in AI technologies.

This also applies to the many Nigerian princes who will no doubt gain a richer vocabulary and better contextual understanding of us in the coming months.

What do we do now?

From our side of the table, the coming period will be spent pressure-testing the AI solutions that are entering the arena within our own field.

This includes, for example, Perfion’s new AI initiative, which offers an AI assistant directly in Perfion’s grid.

As for the broader political, legal, and societal implications that come with the use of AI, we, like everyone else, will have to wait and see.

There are undoubtedly some great, productivity-enhancing applications of artificial intelligence that can be implemented in production environments across our clients’ operations without significant risk.